Visualizing The Exponential Growth In AI Computation

Electronic computers, which arrived in the 1940s, had been around for barely a decade before experiments with AI began. Now we have AI models that can write poetry and generate images from textual prompts. But what has led to such exponential growth in such a short time?

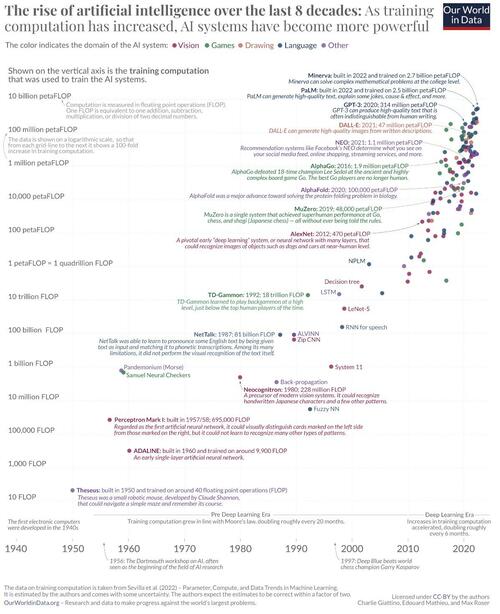

Visual Capitalist’s Pallavi Rao presents this chart from Our World in Data, which tracks the history of AI through the amount of computational power used to train AI models, using data from Epoch AI.

The Three Eras of AI Computation

In the 1950s, American mathematician Claude Shannon trained a robotic mouse called Theseus to navigate a maze and remember its course—the first apparent artificial learning of any kind.

Training Theseus took 40 floating point operations (FLOPs), a unit that counts the basic arithmetic operations (addition, subtraction, multiplication, or division) a computer or processor carries out. In the chart, the figure for each model is the total number of operations used in training, not a per-second rate.

ℹ️ Measured per second, FLOPs are also often used as a metric for the computational performance of computer hardware. The higher the rate, the more computation the system can do and the more powerful it is.

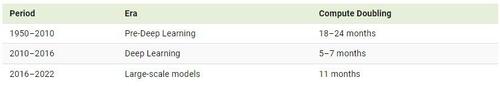

Computational power, the availability of training data, and algorithms are the three main ingredients of AI progress. For the first few decades of AI advances, compute (the computational power needed to train an AI model) grew in line with Moore’s Law, doubling roughly every 20 months.

Source: “Compute Trends Across Three Eras of Machine Learning” by Sevilla et al., 2022.

However, at the start of the Deep Learning Era, heralded by AlexNet (an image recognition AI) in 2012, that doubling timeframe shortened considerably to six months, as researchers invested more in computation and processors.
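To get a feel for what a shorter doubling time means, the sketch below compares growth over one decade under two doubling periods. The six-month figure comes from the text above; the 20-month figure for the Moore’s Law era is an assumption based on the doubling time commonly quoted for that period, and the name `growth_factor` is purely illustrative.

```python
def growth_factor(months: float, doubling_months: float) -> float:
    """Multiplicative growth after `months` when a quantity doubles every `doubling_months`."""
    return 2.0 ** (months / doubling_months)


DECADE_MONTHS = 120

# Assumed doubling periods: ~20 months (Moore's Law era), ~6 months (Deep Learning era).
moore_era = growth_factor(DECADE_MONTHS, 20)
deep_learning_era = growth_factor(DECADE_MONTHS, 6)

print(f"20-month doubling over a decade: {moore_era:,.0f}x")         # 64x
print(f"6-month doubling over a decade:  {deep_learning_era:,.0f}x")  # 1,048,576x
```

Under these assumptions, a decade of 20-month doublings gives a 64-fold increase, while a decade of six-month doublings gives roughly a million-fold increase.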

With the emergence of AlphaGo in 2015—a computer program that beat a human professional Go player—researchers have identified a third era: that of the large-scale AI models whose computation needs dwarf all previous AI systems.

Predicting AI Computation Progress

Looking back at only the last decade, compute has grown so tremendously that it’s difficult to comprehend.

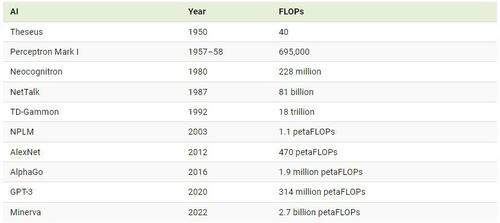

For example, the compute used to train Minerva, an AI that can solve complex math problems, is nearly 6 million times that used to train AlexNet 10 years earlier.
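As a rough check on how that ratio relates to the six-month doubling time, the sketch below works backward from a growth multiple to the doubling period it implies. It assumes the 6-million-fold increase took place over exactly 120 months and that growth was smoothly exponential; the function name is illustrative.

```python
import math


def implied_doubling_months(growth: float, months: float) -> float:
    """Doubling period implied by a total `growth` multiple over `months`."""
    # Number of doublings is log2(growth); spread them evenly over the period.
    return months / math.log2(growth)


# Assumption: ~6,000,000x growth over 10 years (AlexNet to Minerva).
doubling = implied_doubling_months(6e6, 120)
print(f"Implied doubling time: {doubling:.1f} months")  # 5.3 months
```

The result, a little over five months, is in the same range as the six-month doubling time cited for the Deep Learning Era.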

Here’s a list of important AI models through history and the amount of compute used to train them.

Note: One petaFLOP = one quadrillion FLOPs. Source: “Compute Trends Across Three Eras of Machine Learning” by Sevilla et al., 2022.
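The unit conversion in the note can be sketched as follows. The helper names are hypothetical, and the only model figure used is the 40 FLOPs quoted for Theseus earlier in the article.

```python
FLOP_PER_PETAFLOP = 10**15  # one quadrillion


def to_petaflop(flop: float) -> float:
    """Convert a raw FLOP count to petaFLOP."""
    return flop / FLOP_PER_PETAFLOP


def from_petaflop(petaflop: float) -> float:
    """Convert petaFLOP back to a raw FLOP count."""
    return petaflop * FLOP_PER_PETAFLOP


# Theseus, at 40 FLOPs, is a tiny fraction of a single petaFLOP.
theseus_petaflop = to_petaflop(40)
print(f"Theseus: {theseus_petaflop:.0e} petaFLOP")  # 4e-14 petaFLOP
```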

This growth in computation, along with the availability of massive data sets and better algorithms, has yielded a lot of AI progress in seemingly very little time. AI now not only matches but also beats human performance in many areas.

It’s difficult to say whether the same pace of computation growth will be maintained. Large-scale models require ever more compute to train, and if computation doesn’t continue to ramp up, progress could slow. Exhausting all the data currently available for training AI models could also impede the development and implementation of new models.

However, with all the funding poured into AI recently, more breakthroughs may be around the corner, such as matching the computational power of the human brain.

Tyler Durden

Wed, 09/20/2023 – 04:15
