Instagram Videos Sexualizing Children Shown To Adults Who Follow Preteen Influencers

In June, we noted that Meta’s Instagram was caught facilitating a massive pedophile network, by which the service would promote pedo-centric content to other pedophiles using coded emojis, such as a slice of cheese pizza.

According to the Wall Street Journal, Instagram allowed pedophiles to search for content with explicit hashtags such as #pedowhore and #preteensex, which were then used to connect them to accounts that advertise child-sex material for sale from users going under names such as “little slut for you.” And according to the National Center for Missing & Exploited Children, Meta accounted for more than 85% of child pornography reports, the Journal reported.

Companies whose ads appeared next to inappropriate content included Disney, Walmart, Match.com, Hims and the Wall Street Journal itself.

And yet, no mass exodus of advertisers…

They haven’t stopped…

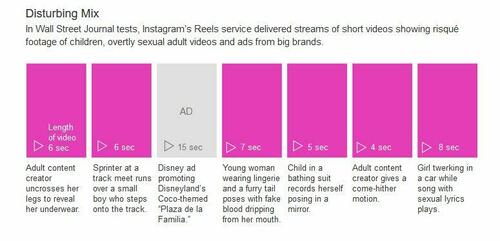

According to a new report by the Journal, Instagram’s ‘reels’ service – which shows users short video clips of things which the company’s algorithm thinks users would find of interest – has been serving up clips of sexualized children to adults that follow preteen influencers, gymnasts, cheerleaders and other categories that child predators are attracted to.

The Journal set up the test accounts after observing that the thousands of followers of such young people’s accounts often include large numbers of adult men, and that many of the accounts who followed those children also had demonstrated interest in sex content related to both children and adults. The Journal also tested what the algorithm would recommend after its accounts followed some of those users as well, which produced more-disturbing content interspersed with ads.

In a stream of videos recommended by Instagram, an ad for the dating app Bumble appeared between a video of someone stroking the face of a life-size latex doll and a video of a young girl with a digitally obscured face lifting up her shirt to expose her midriff. In another, a Pizza Hut commercial followed a video of a man lying on a bed with his arm around what the caption said was a 10-year-old girl. -WSJ

Separate tests run by the Canadian Centre for Child Protection produced similar results.

Meta defended itself, telling the Journal that the tests were a ‘manufactured’ experience that doesn’t represent what billions of users see. That said, the company refused to comment on why its algorithms “compiled streams of separate videos showing children, sex and advertisements.” It did say that it gave advertisers tools in October to provide greater control over where their ads appear (so it’s their fault since October?), and that Instagram “either removes or reduces the prominence of four million videos suspected of violating its standards each month.”

The Journal contacted several advertisers whose ads appeared next to inappropriate videos, and several said they were ‘investigating,’ and would pay for brand-safety audits from an outside firm.

Robbie McKay, a spokesman for Bumble, said it “would never intentionally advertise adjacent to inappropriate content,” and that the company is suspending its ads across Meta’s platforms.

Charlie Cain, Disney’s vice president of brand management, said the company has set strict limits on what social media content is acceptable for advertising and has pressed Meta and other platforms to improve brand-safety features. A company spokeswoman said that since the Journal presented its findings to Disney, the company had been working on addressing the issue at the “highest levels at Meta.”

Walmart declined to comment, and Pizza Hut didn’t respond to requests for comment.

Hims said it would press Meta to prevent such ad placement, and that it considered Meta’s pledge to work on the problem encouraging. -WSJ

Meta’s ‘reels’ feature was designed to compete with TikTok, the video-sharing platform owned by Beijing-based ByteDance, which also features a nonstop flow of user-posted videos that are monetized by inserting ads among them.

According to the report, experts on algorithmic recommendation systems said that the Journal’s testing showed that while gymnasts might appear next to a benign topic, Meta’s behavioral tracking has determined that some Instagram users following preteen girls also want to engage with videos sexualizing children, and then serves those people that content.

Current and former Meta employees said in interviews that the tendency of Instagram algorithms to aggregate child sexualization content from across its platform was known internally to be a problem. Once Instagram pigeonholes a user as interested in any particular subject matter, they said, its recommendation systems are trained to push more related content to them.

Preventing the system from pushing noxious content to users interested in it, they said, requires significant changes to the recommendation algorithms that also drive engagement for normal users. Company documents reviewed by the Journal show that the company’s safety staffers are broadly barred from making changes to the platform that might reduce daily active users by any measurable amount. -WSJ

And despite both the Journal and the Canadian Centre for Child Protection informing Meta in August about their findings, “the platform continued to serve up a series of videos featuring young children, adult content and apparent promotions for child sex material hosted elsewhere.”

So, more catering to pedos. And advertisers don’t seem to care.

What say you, big brands? https://t.co/GKEzn1fqQb

— Elon Musk (@elonmusk) November 28, 2023

Tyler Durden

Tue, 11/28/2023 – 15:50

via ZeroHedge News https://ift.tt/15bQN9P