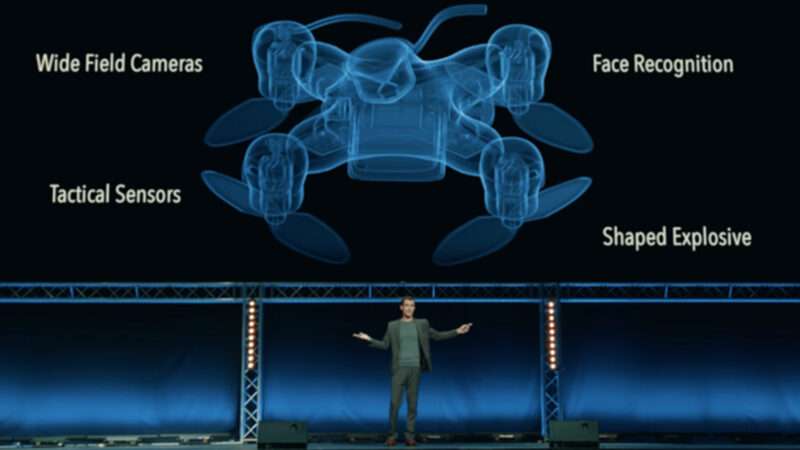

In 2017, the Boston-based Future of Life Institute released a chilling 7-minute arms control video entitled Slaughterbots. It featured swarms of autonomous killer drones using facial recognition technology to hunt down and attack specific human targets. Now, according to a new United Nations (U.N.) report on military activity in war-torn Libya, that fictional scenario may have taken a step towards reality.

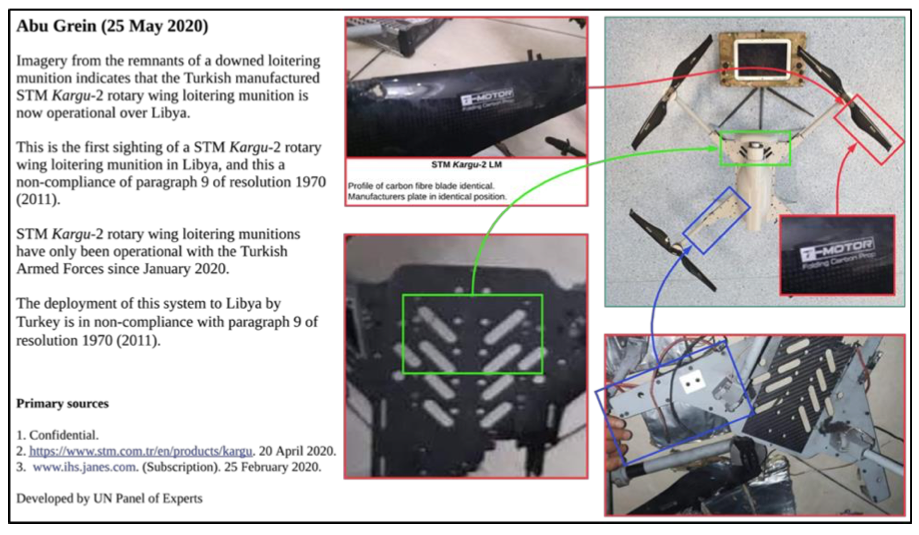

Specifically, the report notes that retreating convoys and troops associated with Libyan strongman Khalifa Haftar “were subsequently hunted down and remotely engaged by the unmanned combat aerial vehicles or the lethal autonomous weapons systems such as the STM Kargu-2 and other loitering munitions.” The report adds that “the lethal autonomous weapons systems were programmed to attack targets without requiring data connectivity between the operator and the munition: in effect, a true ‘fire, forget and find’ capability.” In other words, once launched, the drones were programmed to identify and attack targets without further human intervention.

In the Turkish newspaper Hurriyet, STM CEO Murat Ikinci observed that his company’s kamikaze drones “all have artificial intelligence and have face recognition systems.” Weighing less than 70 kilograms each, the drones have a range of 15 kilometers and can stay in the air for 30 minutes while carrying explosives. In addition, the Kargu drones can reportedly operate as a coordinated swarm of 30 units that cannot be stopped by advanced air defense systems.

The U.N. report notes that making such weaponry available to armed groups in Libya violates 2011 U.N. Security Council Resolution 1970, which declares that all member states “will immediately take the necessary measures to prevent the direct or indirect supply, sale or transfer…of arms and related materiel of all types, including weapons and ammunition, military vehicles and equipment” to combatants in Libya.

In response to the new U.N. report, Future of Life Institute co-founder and Massachusetts Institute of Technology physicist Max Tegmark tweeted, “Killer robot proliferation has begun. It’s not in humanity’s best interest that cheap #slaughterbots are mass-produced and widely available to anyone with an axe to grind. It’s high time for world leaders to step up and take a stand.”

In a 2015 open letter, Tegmark and his colleagues at the Future of Life Institute argued that world leaders should institute a “ban on offensive autonomous weapons beyond meaningful human control.”

Other researchers believe that such a ban would be premature because, they argue, autonomous weapons systems could behave more morally than human warriors do. Nevertheless, Tegmark is correct that the Kargu drone attack in Libya takes the discussion of how to govern warbots from the realm of languid theorizing to urgent reality.

from Latest – Reason.com https://ift.tt/3fHP6gE
